Look, No Hands!

When will self-driving cars be ready for the road? Alumni working in this rapidly developing field weigh in.

The self-driving space is burgeoning thanks, in part, to the innovations of Harvey Mudd alumni. Their experience and expertise across disciplines are invaluable to making the autonomous vehicle (AV) reliable, attainable and safe. Today, Americans drive over three trillion miles per year, and the AV industry wants to capture those miles. Two major challenges facing the industry (especially in dense urban landscapes like San Francisco, where many AV enterprises are headquartered) are the AV’s ability to perceive the road accurately to within centimeters and its ability to make split-second decisions.

The holy grail of AV companies is reaching Level 5 in autonomy: totally self-driving vehicles.

To achieve this, AVs must have the right sensors and software to control the vehicle and navigate without a driver, all in a social and legal climate that demands safety and is skeptical that AVs can match the performance of human drivers.

No company is there yet. All are working furiously to be the first to reach that goal, and Mudd alumni are the problem solvers who stand at the fore of an industry developing at warp speed.

“My sense is that the legal hurdles are much lower than the technological ones,” says Michael Reynolds ’04. Before joining the consumer law section of the California Attorney General’s office in 2017, he worked at the corporate firm O’Melveny & Myers counseling startups and car makers about legal and regulatory issues with AVs. Noticing a dearth of legal and technical expertise in the field of vehicle automation, he helped establish the firm’s first AV group in 2015.

According to Reynolds, the federal regulators at the National Highway Traffic Safety Administration have taken a hands-off approach to vehicle automation. “The reason we don’t see true self-driving or autonomous vehicles on the roads today is because the technology isn’t safe enough, not because of regulation,” he says.

He points to many potential advantages of AVs, including decreasing traffic deaths, increasing mobility for disabled people and the elderly, reducing congestion and possibly reducing carbon emissions.

“Autonomous vehicles hold a lot of promise, but it’s all a little theoretical right now,” he says.

Can AV companies move beyond promise to profit? Here are some insights from alumni who work in the industry.

Uber

Since 2016, Uber has invested nearly $1 billion in its autonomous vehicle group, bringing on new hires, including HMC alumni, who advance AV technologies to make safe, reliable vehicles.

Eric Huang ’02

Senior machine learning systems engineer, self-driving vehicles

Uber Advanced Technologies Group (ATG)

At Uber since 2017, Huang leverages his training and experience in artificial intelligence and software engineering to build scalable systems that support machine learning for self-driving cars. He thinks about AVs beyond the boundaries of science and technology, viewing them in a broader societal context.

“The scope and immediacy of the impact of self-driving technology on society is one of the most exciting aspects of my work,” he says. “I’m interested in problems that have the potential to disrupt society, so the self-driving industry is a good match for me.”

He is fascinated to witness these impacts firsthand in legal, political and social landscapes, all of which are changing to accommodate the new technologies.

After earning his PhD in computer science at UCLA in 2010, Huang worked in both academia and industry, landing at LinkedIn in 2014 before being recruited by Uber. A musician as well as a scientist and engineer (Huang double majored in computer science and music at Mudd), he’s got an interesting perspective on the industry. “I’m applying artificial intelligence in ways that improve the quality of life responsibly and compassionately,” he says.

Daniel Gruver ’05

Senior program manager, Uber ATG

A research engineer, Gruver develops new technologies for AV sensing systems. He’s been managing people and projects at Uber since 2016, working to improve safety and convenience, both paramount to creating this new mode of transportation.

Gruver says that he’s drawn to his work by the breadth of technical, operational and logistical challenges. He relies on his HMC education (“something from nearly every class I took across every discipline”) and has been working on self-driving vehicles since 2009, doing research and development at Google and Otto before coming to Uber.

The field has come a long way, he says, but he acknowledges that some fundamental challenges remain. “When I started in 2009, [the AV industry] was mostly a research area, and companies were just starting to look at turning it into a scalable product. The complexity of the challenge is still large, the business case is still clear and the societal benefits are still significant.”

According to Gruver, there’s been a lot of progress in areas like vehicle platforms, data management and more robust compute architectures, but he sees room for significant advances in sensing, algorithms and system validation.

Argo AI

Founded by former leaders from Google and Uber, Argo AI launched its third-generation, self-driving fleet earlier this year. With major investments from Ford Motor Company and Volkswagen and research partnerships with Georgia Institute of Technology and Carnegie Mellon University, Argo is road testing in four major cities, including Palo Alto, California, where Olivia Watkins ’19 interned.

Olivia Watkins ’19

Detection intern

The joint computer science and mathematics major and NSF Graduate Research Fellowship recipient thinks autonomous vehicles “could be huge in helping reduce driving accidents and saving lives.” She adds, “Also, I personally would prefer the convenience of being able to read or sleep in my car while it drives.”

During her summer at Argo AI, she worked on helping the self-driving car detect emergency vehicle sirens so it could move out of the way. She notes that while safety is a concern for all of the AV industry, Argo seems to have a special focus on the wellbeing of passengers and the public, using what it calls “the world’s most rigorous driving school” for its vehicles.

The time she spent at Argo before beginning graduate studies in CS/artificial intelligence at UC Berkeley allowed her to reflect on the ethics of AVs as well. “There are definitely ethical issues around safety, like how robust a system has to be before we put it on the road,” she says. “There’s also a privacy issue regarding the data collected. In my project, for instance, we had to deal with the fact that the microphones we used to pick up sirens might also pick up some human conversations (inside or outside the car), and we spent some time discussing the best way to extract data from these mics without violating people’s privacy.”

May Mobility

May Mobility, an Ann Arbor, Michigan-based startup, is developing self-driving shuttles for low-speed environments, including college and corporate campuses and central business districts. May, which was founded with $11.5 million in seed money from the autonomous vehicle investment departments of Toyota and BMW, develops the software for its vehicles, which it owns and operates. So far, it has successfully deployed its shuttles in Columbus, Ohio; Grand Rapids, Michigan; Providence, Rhode Island; and Detroit, Michigan.

Sean Messenger ’15

Senior robotics engineer

Sean Messenger was firmly rooted in academia before he veered into industry via a startup co-founded by Edwin Olson, a professor in the robotics PhD program at the University of Michigan, where Messenger earned a master’s degree in robotics. Under Olson, Messenger worked on mapping and multi-agent autonomy, but he was drawn to Olson’s hands-on approach to the autonomous vehicle market.

“[Olson felt] we should be fulfilling a transportation need, not pursuing a technological curiosity. We should be in this domain, first and foremost, giving value and not just doing a research project. We should be taking the technology we have today and applying it to problems we can solve,” Messenger says.

Messenger, who’s been at May for two years, started out working on the fuel system stack, then moved to perception: what the car “sees.” “Is there a car in front, a pedestrian on the sidewalk, a dog jumping into the road?” Messenger explains. Now, he specializes in simulation and validation for the autonomous system.

“We embrace involving attendants as part of the safe development of autonomous vehicles,” he says. “We also embrace operating in real environments, with real riders, solving a real need. We have cars driving on public roads in four different cities today taking everyday passengers around. It’s not a niche experience for them. It’s the realization of filling a transportation need and getting people from one place to another.”

May Mobility’s shuttles—Messenger describes them as “souped-up, luxury-style golf carts”—have six seats, some of them turned backward to facilitate socializing (campfire seating), and go between 10 and 25 miles per hour. “We’re not targeting the high-speed market,” he says.

“High-speed operation comes with high risk exposure simply due to the speed of all involved vehicles and pushes the limits of existing sensor technology. Our choice of targeting low-speed operation, on the other hand, allows us to design for some particularly challenging situations, such as stop signs and pedestrian interactions, while leveraging use of sensor technology that already exists,” says Messenger.

Aman Fatehpuria ’18

Field autonomy engineer

Aman Fatehpuria was recruited by Messenger at an HMC job fair and went to work at May Mobility after graduation. He travels to sites ahead of May’s shuttle deployments to lay the groundwork for a smooth rollout. Fatehpuria also maintains vehicles in the field.

Based in Ann Arbor, he spends about 50 percent of his time traveling to sites, gathering data about an area to send back to May’s robotics team so they can program the vehicles to navigate a specific landscape. He also does considerable outreach to the communities where the shuttles are being deployed.

“I prepare the public by educating them about AVs and try to ease them in because many people are wary about riding in self-driving vehicles,” he says.

His passion project has been the route that opened in Providence, Rhode Island, last May. The shuttle solved a headache for the thousands of commuters who travel Amtrak’s 42-mile route between Boston and Providence every day. Previously, after arriving at the Providence Amtrak station, commuters had to hail rides to the city’s municipal bus station to complete the commute to their jobs. May’s shuttles linking trains and buses simplified their lives.

“This is a perfect example of solving a real problem with AVs,” says Fatehpuria.

Cruise

Cruise, the self-driving division of General Motors, is designing a car service that can navigate complex urban terrain, such as the streets of San Francisco, where the company is located. Cruise is increasing the size of its test fleet so it can collect enough data to launch its line of safe, self-driving, all-electric vehicles.

Paul Wais ’07

Software engineer (2015–May 2019)

At Cruise, Wais worked both on- and off-car. As an early member of the engineering team, he built the company’s first Apache Spark cluster, which was used to mine data for Cruise’s computer vision training dataset and for ad-hoc, retrospective, fleet-wide studies. Spark continues to be a useful tool for the data science and product integrity teams responsible for computing and monitoring key top-line fleet metrics.

Wais worked mostly on computer vision while at Cruise. He shipped and served as team lead for Visual Freespace, worked on emergency vehicle recognition and traffic lights, made contributions to the vision-based object detection stack and joined Cruise’s community outreach team as a high school CS instructor. “I also spent about a year working full-stack on vision-based near-field sensing for pedestrians and small obstacles,” he says.

He says perception is the main unknown in driving today. “What sort of deep learning model will it take to detect and forecast the motion of every other player on the road? Nobody has yet built a system that exhibits human-level performance for city or even suburban driving. It is this challenge that sparks the gold rush of engineering effort towards self-driving.”

According to Wais, engineers inside and outside the self-driving space are required to make significant decisions regarding data and model performance. “Within self-driving, there is a race to collect the largest dataset, since perception models underperform safety targets today and it is expected that more data will make the models perform better,” he says. “However, the proposition that more driving will yield better models is only a hypothesis. There are empirical results warranting hope (and exciting large investment), but no theoretical guarantees on the minimum data it will take for a deep model to outperform a human.”

He believes that the hardest problem in the self-driving industry is not that of software but of getting diversely talented individuals to work together as a team. He learned that skill, he says, in the Science, Technology, and Society class at HMC. “It provided excellent preparation for understanding the challenges of teamwork.”

Wais is also concerned about how the self-driving industry handles public safety and is an advocate of open-source software that would allow “concrete, effective conversations about the safety of self-driving systems.”

Ford Motor Company

From the release of the Model T to the introduction of assembly-line production and the eight-hour workday, Ford’s products and business practices have shaped vehicle manufacturing and American society for more than 100 years. The company is working on fully self-driving cars in partnership with Argo AI and Ford AV LLC.

Shreyasha Paudel ’14

Perception research engineer

Having worked as a summer intern at Ford, Paudel had a good understanding of company culture and the types of projects she’d be working on when she joined the company full time in 2016.

“My work is right between research and application,” she says. “I get to read the most recent academic work and interact with the field. And I get to choose how to apply that research into solving some real problems.”

As a research engineer for the sensor fusion and perception team, Paudel develops algorithms to understand the signals from each of the various sensors in the car (cameras, lidar, radar) and combine them in a reliable way so that a driver or a computer can make driving decisions. She says, “My research focuses on robustness in perception algorithms for driving assistance features, so I am trying to understand where our sensors and algorithms work and how to identify when the algorithms may not work properly.”

Paudel is part of a team building automated features for driver assistance systems and autonomous vehicles, improving safety and comfort for passengers. “Self-driving cars bring a lot of advantages in mobility opportunity for people who can’t or do not want to drive,” she says. “We should use our wariness to make sure that the technology is developed safely and with user empowerment and privacy as a focus.”

CARMERA

To drive safely, AVs must be able to navigate the road with high-definition maps that give a detailed representation of the surrounding environment, from stop signs to lane markings. CARMERA is a startup dedicated solely to the HD-mapping challenge.

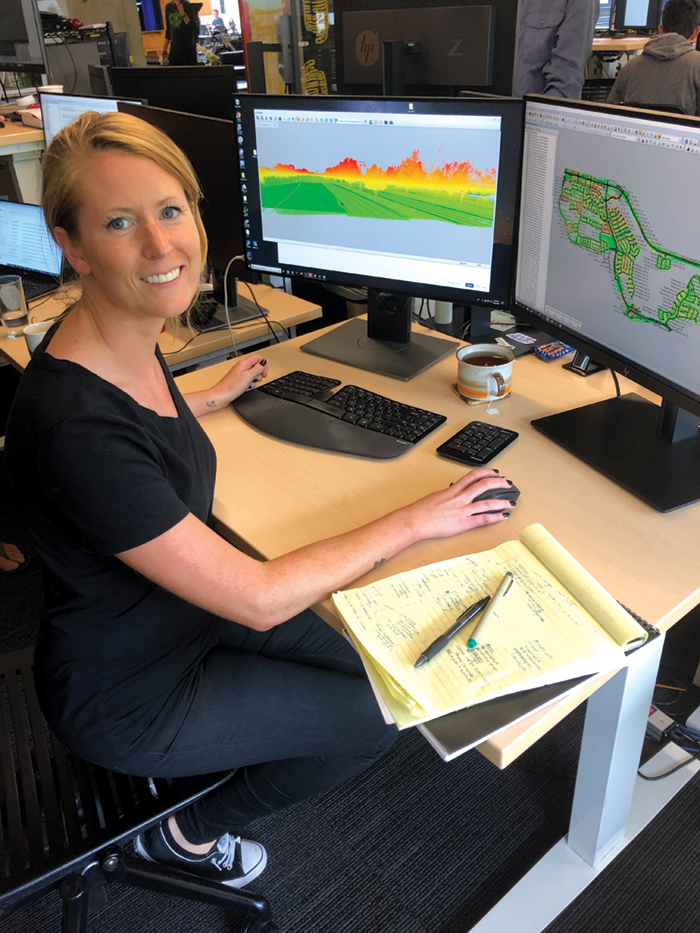

Audrey Lawrence ’11

Engineering manager

Lawrence is something of a veteran in the AV industry. She started at Uber (smartphone sensor data team), then moved to Cruise (data infrastructure team) before joining CARMERA this year. Lawrence now manages geospatial engineers who are responsible for building, storing and updating 3-D maps.

She was attracted to CARMERA’s approach of serving as a map provider for automotive manufacturers, self-driving car services and AV research institutions and its philosophy that sharing data is best for everyone.

HD maps enable the safe operation of self-driving cars by building a detailed representation of the surrounding environment. With them, an AV knows exactly where it is in a lane, with its location pinpointed to within a few centimeters. The precise maps enable vehicles to navigate complex conditions, such as densely populated cities or rough weather, by detecting critical changes in real time.

“Traditionally, this approach for both localization and navigation has been costly to create and update,” she says. “It requires expensive vehicle hardware to scan the roads, followed by machine and human effort to annotate this data. I believe there will be a number of winners in the self-driving space and that the best approach is to share data for map creation to ensure that all have the most up-to-date and safest data.”

Maps change constantly, so to meet the need for current information, CARMERA partners with fleets across the United States to collect imagery data and detect changes to the underlying map. CARMERA is partnering to scale its map creation infrastructure and deliver custom maps based on a company’s AV platform. The company is also “building out systems to detect map changes and pushing these detection systems to cheaper hardware so devices can be widely deployed,” she says.

Lawrence’s macro view of the industry? “I believe that self-driving cars have the potential to make roads much, much safer.”